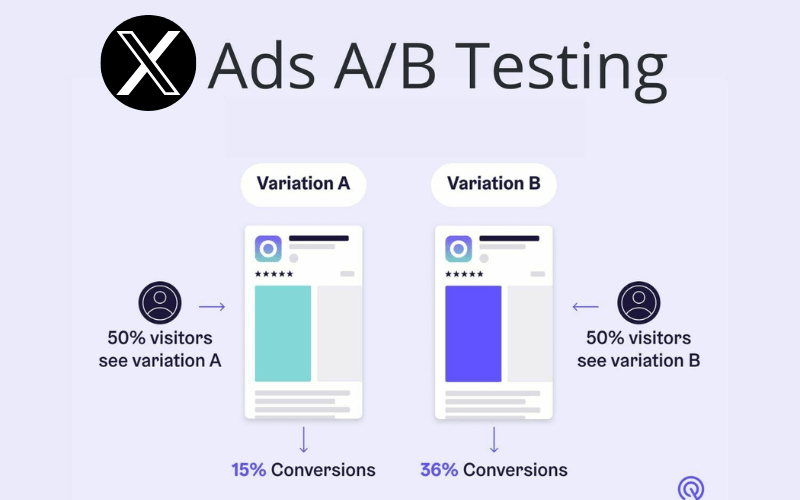

A/B testing allows brands to optimize Twitter ads by systematically comparing different creatives, messaging, targets, and placements. Applying a strategic approach to experimentation unlocks what resonates most with your audiences to drive engagement and conversions.

This comprehensive guide covers everything you need to implement successful A/B testing for Twitter ads, from developing test hypotheses to analyzing results. Follow these best practices to improve campaign performance continuously.


The Value of A/B Testing for Twitter Ads

What are the key benefits of incorporating A/B testing into your Twitter ads strategy?

Discover what resonates – Identify messaging, creative, targets, and placements that drive maximum engagement.

Increase conversions – Test different factors to determine what combo generates the most sign-ups, sales, etc.

Inform future campaigns – Apply learnings to create higher-performing ads from the start.

Continuously optimize – Use an iterative test-and-learn approach to keep improving over time.

Maximize return on ad spend – Boost engagement and conversion rates through refinement.

Take the guesswork out – Base decisions on data insights rather than assumptions.

Frequent A/B testing leads to better-performing Twitter ad accounts overall.

How to Develop Test Hypotheses

Creating hypotheses focused on potential areas for optimization guides your testing.

Tips for Developing Twitter Ads A/B Test Hypotheses:

- Review historical performance data – Look for patterns that separate high performers from low performers.

- Analyze engagement metrics – Note which copy/creative/targets drive the most clicks or conversions.

- Identify pain points – Flag areas like high CPC or low CTRs to address.

- Listen to customer feedback – Feedback and surveys may surface winning concepts.

- Leverage intent data – Mine search trends and tweets to find resonant messaging.

- Monitor competitors – Test approaches that are working well for other brands.

- Consult your Twitter rep – Reps can offer perspective on optimization best practices.

- Question your assumptions – Turn beliefs about ideal landing pages, ad lengths, etc., into testable theories.

Creating structured hypotheses upfront leads to more impactful experiments.

Structuring Effective A/B Tests

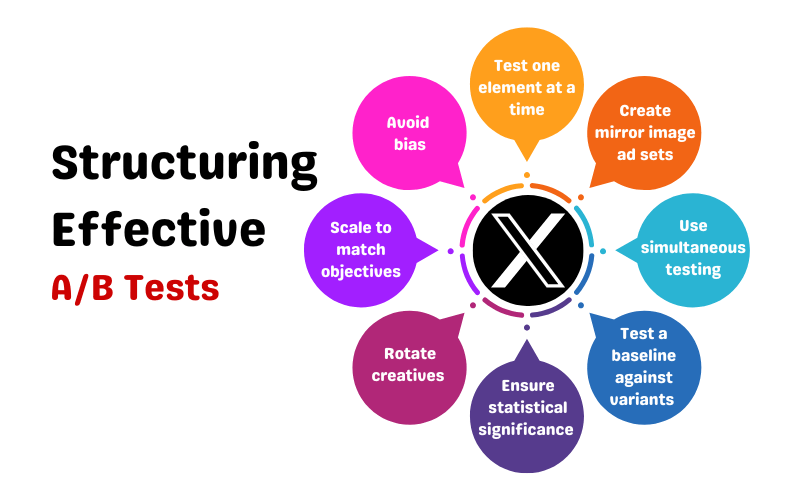

Proper test structure isolates specific variables and draws clear comparisons. Keep these tips in mind:

- Test one element at a time – For example, the headline or the audience. Don't test the headline, copy, and image simultaneously.

- Create mirror image ad sets – Keep everything, including budget and targeting, identical except one variable.

- Use simultaneous testing – Run variants concurrently instead of sequentially so they face the same conditions and data accumulates faster.

- Test a baseline against variants – See how new approaches compare to an existing control version.

- Ensure statistical significance – Let tests run long enough to smooth out daily fluctuations and collect enough data for a reliable result.

- Rotate creatives – Show alternate versions evenly so ad fatigue doesn’t influence performance.

- Scale to match objectives – Tailor test duration and sample sizes to your goals.

- Avoid bias – Don't make changes or stop tests before collecting full results.

Thoughtful structure and discipline in your testing methodology improve the integrity of what you learn.

Elements to Test for Twitter Ads

Many components can be tested individually to optimize Twitter ads. Consider experimenting with:

Creative: Images, video, emojis, illustrations

Copy: Headlines, descriptions, captions, calls-to-action

Messaging: Benefits emphasized, offers highlighted

Design: Colors, fonts, styles, logos, visual branding

Calls-to-Action: Clicks, conversions, downloads, sign-ups

Hashtags & Tags: Different hashtags or tagged accounts

Audiences: Interests, followers, lookalikes, etc.

Placements: Home timelines, profiles, search results, etc.

Timing: Days, times, ad sequence, durations, etc.

Formats: Promoted tweets, accounts, video styles, etc.

Landing Pages: Lead gen forms, video pages, articles, etc.

Every element is an opportunity to drive lifts through iterative testing.

Creative Testing Approaches

Testing visuals and videos using different concepts, styles, and content is key to boosting Twitter ad performance.

Tips for Testing Twitter Ad Creative:

- Test lifestyle imagery vs. product-focused creative

- Try different emoji, illustration, or infographic styles

- Compare conceptual creative vs. branded creative

- Experiment with emotional appeals vs. rational appeals

- Test concise, single-value copy against longer, descriptive copy

- Compare image carousels that rotate through different visuals

- Try square videos vs. vertical or horizontal on mobile

- Compare polished ads against native-feeling, Twitter-style videos

- Test static images against animated GIFs or video

- Evaluate product demo reels against brand films or testimonials

Continue optimizing creative based on what visual styles and concepts resonate most.

Copy Testing Methods

Testing ad copy and messaging approaches helps you find the right words to convey your message.

Best Practices for Copy Testing:

- Test emotional copy against rational, factual copy

- Try headlines focused on product benefits vs. audience benefits

- Experiment with different calls-to-action based on goals

- Evaluate conversational copy vs. formal advertising copy

- Compare long-form descriptive copy against short, pithy copy

- Test first-person narrative copy against third-person informational copy

- Try direct imperatives like "Do This Now" against softer suggestions

- A/B test copy with and without descriptive adjectives like "award-winning" or "life-changing"

- Evaluate mentions of specific features vs. general capabilities

- Try teaser copy against direct, benefit-driven copy

Determine what copywriting style best suits your strategy and audience.

Hashtag and Tag Testing

Including relevant hashtags and tagging key accounts helps expand Twitter ad reach. Here are tips for optimizing them:

Hashtag Testing Ideas:

- Test branded hashtags vs. generic hashtags

- Compare product name hashtags to benefit-focused hashtags

- Evaluate widely used hashtags vs. newly created ones

- Experiment with hashtag length, such as a concise single word vs. a longer, more descriptive phrase

- Try adding hashtags to copy vs. keeping copy hashtag-free

Tag Testing Tips:

- Test tagging complementary brands/influencers vs. competitors

- Compare tagging brand handles vs. individuals like founders

- Evaluate whether adding tags boosts engagement

- Analyze whether the audiences you reach differ depending on which accounts you tag

Leverage relevant tags to reach engaged conversations and communities.

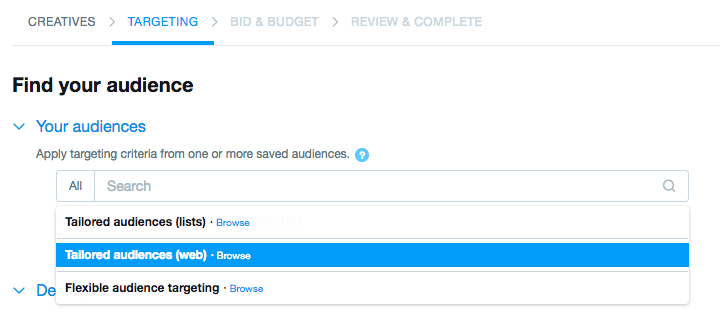

Targeting and Audience Testing

Dialing in ad targeting is crucial for messaging relevance. Ideas for tests include:

- Interests for your product vs. interests for key benefits

- Layered interests vs. a single narrow interest

- Custom audiences built from email lists vs. website visitor lists

- Lookalike audiences from customers vs. visitors

- Followers of competitors vs. complementary brands

- Non-followers vs. existing followers

- Keyword targeting turned on vs. off

- Manually excluded audiences vs. automatic exclusions

- Broad location targeting vs. tight postal code targeting

- Targeting men vs. women for gendered products

Refine your targeting over time to identify groups most likely to convert.

Landing Page Testing

Optimizing landing pages can improve downstream conversions from Twitter ads. Test elements like:

- Long-form sales pages vs. quick lead gen forms

- Content pages focused on specific products or use cases vs. general pages

- Pages personalized with visitor name vs. generic pages

- Pages with demos or interactive elements vs. static pages

- Pages emphasizing certain benefits or offers over others

- Contrasting value propositions and headlines

- Varied call-to-action types and wording

- Different form lengths and number of fields

- Social share/follow options displayed prominently vs. not

Continual optimization of landing experiences beyond the initial ad click is essential.

Timing and Ad Sequence Tests

When and how you deploy ads influences performance. Try experiments like:

- Morning vs. evening or weekday vs. weekend timing

- Accelerated vs. standard (even) budget pacing

- On/off pulsing ads vs. always-on delivery

- Ad sequences triggered by video views, site visits, and purchase events

- Ad series that move from intro to product, comparison, and call-to-action ads

- Different maximum frequency caps (e.g., 2 vs. 4 impressions per day)

- Capping impressions vs. optimizing for conversions

- Launching campaigns pre-holidays vs. during vs. post-holidays

- Pausing poor performers quickly vs. letting them run

Factor in seasonality, buying cycles, and shifts in intensity across your campaign calendar.

Testing Ad Formats

Compare how different Twitter ad formats perform for your specific offer or content. Potential tests:

- Square vs. landscape video for First View ads

- Promoted video ads vs. first-click free video ads

- Promoted tweets vs. promoted accounts

- Promoted trend ads vs. promoted moments

- Swipeable image carousels vs. single image ads

- Video vs. cinemagraph vs. large static image ads

- Collection ads vs. app card ads

Not all formats work equally well across objectives. Test which options deliver the best results for your goals.

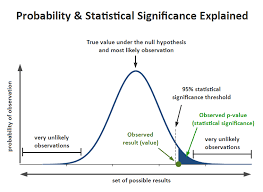

Statistical Significance in A/B Testing

Allow tests to run sufficiently long for results to be statistically significant.

Tips for Significance in Twitter Ad Testing:

- Set minimum durations that fit your KPIs – for example, 7 days for CTR and 14+ days for conversions.

- Use a power calculator to determine the sample size needed to detect the lift you care about.

- Assess whether day-to-day variability is wider than the difference between groups.

- Ensure enough impressions are delivered to each variant.

- Calculate the confidence interval and know the margin of error.

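- Check whether the confidence interval for the difference between variants excludes zero; overlapping per-variant intervals alone do not rule out a real difference.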

- Avoid stopping tests at random highs or lows.
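The power calculation mentioned above can be sketched in a few lines. This is a minimal sketch, assuming a two-sided two-proportion z-test and the standard normal-approximation sample-size formula; the function name `sample_size_per_variant` and its defaults (5% significance, 80% power) are illustrative choices, not part of any Twitter Ads tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Impressions needed in EACH variant to detect `relative_lift` over
    `baseline_rate` with a two-sided two-proportion z-test (hypothetical helper)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p1 + p2) / 2                          # average rate under the null
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # 1% baseline CTR, hoping to detect a 20% relative lift (1.0% -> 1.2%).
    print(sample_size_per_variant(0.01, 0.20))
```

For a 1% baseline CTR and a 20% relative lift, this works out to a little under 43,000 impressions per variant, which illustrates why small lifts on low-rate metrics such as CTR need substantial delivery before a result can be trusted.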

Statistical rigor strengthens the integrity of your test analysis and learnings.

Analyzing Results and Identifying Winners

Follow best practices when analyzing A/B test results:

- Let tests run fully before evaluating data to avoid bias.

- Calculate lift as the percentage difference between the control and each variant.

- Consider both rates and volume – a lower-performing option could still drive more overall conversions.

- Weigh both primary and secondary metrics like CTR and conversions.

- Segment data by device, gender, and audience to uncover insights.

- Evaluate statistical significance and the margin of error.

- Check campaign settings to confirm that delivery and budgets were mirrored across variants.

- Declare a clear winner only when the difference falls outside the margin of error, i.e., when the confidence interval for the difference excludes zero.
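To make lift, margin of error, and significance concrete, here is a minimal sketch for comparing a control and a variant on a rate metric such as CTR or conversion rate. It assumes independent impressions and samples large enough for the normal approximation; `compare_variants` is a hypothetical helper, and the unpooled confidence interval and pooled z-test are standard textbook choices, not how any particular ad platform reports results.

```python
import math
from statistics import NormalDist


def compare_variants(control_conv: int, control_n: int,
                     variant_conv: int, variant_n: int,
                     confidence: float = 0.95) -> dict:
    """Compare a variant's rate (e.g. clicks/impressions) against the control's."""
    if control_n <= 0 or variant_n <= 0:
        raise ValueError("each variant needs at least one impression")
    p_c = control_conv / control_n
    p_v = variant_conv / variant_n
    diff = p_v - p_c
    # Relative lift: percentage difference between variant and control.
    lift = diff / p_c if p_c > 0 else float("nan")

    # Confidence interval for the difference (unpooled standard error).
    se_diff = math.sqrt(p_c * (1 - p_c) / control_n + p_v * (1 - p_v) / variant_n)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z_crit * se_diff
    ci = (diff - margin, diff + margin)

    # Two-sided p-value from a pooled two-proportion z-test.
    p_pool = (control_conv + variant_conv) / (control_n + variant_n)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / control_n + 1 / variant_n))
    p_value = 2 * (1 - NormalDist().cdf(abs(diff) / se_pool)) if se_pool > 0 else 1.0

    return {
        "control_rate": p_c,
        "variant_rate": p_v,
        "lift": lift,
        "margin_of_error": margin,
        "ci_diff": ci,
        "p_value": p_value,
        # Call a winner only if the interval for the difference excludes zero.
        "significant": ci[0] > 0 or ci[1] < 0,
    }


if __name__ == "__main__":
    result = compare_variants(200, 20_000, 260, 20_000)
    print(f"lift={result['lift']:.1%} CI={result['ci_diff']} p={result['p_value']:.4f}")
```

For example, 200 conversions from 20,000 impressions on the control against 260 from 20,000 on the variant is a 30% relative lift whose interval for the difference excludes zero, while 10 vs. 12 conversions from 1,000 impressions each (a 20% lift) is not distinguishable from noise. The significance verdict here follows the interval; near the threshold, the pooled p-value and the unpooled interval can occasionally disagree.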

Proper analysis prevents incorrect conclusions and surfaces the combinations that truly lift performance.

Implementing Winning Combinations

Activate your learnings by rolling out winning creatives and targets.

Best Practices for Activating Twitter Ads Test Wins:

- Scale up the winning variation – Give it more budget and the primary placement.

- Remove losing variations to avoid spending on poor performers.

- Apply findings across campaigns – Reuse winning images, targets, etc., to improve relevance.

- Build on what works – Tweak and test again rather than starting from scratch.

- Continue monitoring performance – Ensure lifts persist and iterate as needed.

- Create templates codifying what imagery, copy, and settings work.

- Share results across stakeholders – Arm everyone with key learnings to apply.

- Retest periodically as new audiences, objectives and trends emerge.

Continuous optimization depends on disseminating insights and standardizing what works.

Avoiding Common A/B Testing Mistakes

Be aware of these common pitfalls that compromise Twitter ad testing:

- Changing too many variables at once

- Not allowing sufficient time or budget for statistical significance

- Failing to isolate variables into mirrored campaigns

- Changing ads or budgets mid-test

- Ending tests early or not letting them run fully

- Assuming short test periods reflect long-term performance

- Focusing solely on click metrics rather than conversions

- Not testing a baseline against new ideas

- Testing with audiences too small to produce learning

- Trusting initial performance over sustained results

Disciplined methodology and patience are vital for valid Twitter ad A/B testing.

Tools for Managing A/B Tests

Specialized tools help execute and analyze A/B tests, particularly on the landing pages your Twitter ads drive to. Options include:

Google Optimize

Google's free tool for basic A/B test creation (discontinued in September 2023)

Optimizely

Robust experimentation platform with advanced analytics

Adobe Target

Integrates testing into Adobe stacks

AgileCraft

Full-lifecycle agile experiment management

UpMetrics

Visual editor to build tests without engineering

AB Tasty

Enterprise testing solution with personalization

The right platform streamlines executing and reporting on simultaneous experiments.

Building a Testing Culture

Cultivate a continuous test-and-learn mindset across your marketing team through:

- Setting quarterly testing priorities – Identify key focus areas and knowledge gaps.

- Developing testing backlogs – Maintain a roadmap of ideas and hypotheses.

- Appointing testing champions – Recognize those driving important experiments.

- Sharing results broadly – Celebrate and memorialize what’s working in dashboards and team meetings.

- Incentivizing testing – Consider tying testing KPIs to performance management.

- Encouraging ideas from all team members – Innovation can come from anyone.

- Piloting advanced testing approaches like multivariate and sequential testing.

- Leveraging testing support – Utilize Twitter partner managers to strategize and troubleshoot experiments.

Promoting a culture of curiosity, inquiry, and optimization accelerates learning.

A/B Testing Twitter Cards

In addition to testing ad copy and visuals, test tweaks to the expandable Twitter Cards attached to your ads. Variables could include:

- Headline of card preview

- Description in card preview

- Card type, such as summary, app, or player cards

- Call-to-action buttons

- Card destination when opened – content vs. lead gen form

- Image or video preview

- Amount of content and scrolling length

Twitter Card optimization provides another avenue to increase ad engagement.

Key Takeaways for Twitter Ads Testing

To recap, applying a systematic approach to A/B testing Twitter ads unlocks optimization through:

- Developing hypotheses around potential areas for lift

- Structuring valid isolated variable tests

- Trying experiments across creative, copy, targets and placements

- Ensuring statistical significance in your results

- Letting tests run fully before evaluating winners

- Activating learnings by implementing what performs best

- Avoiding common testing pitfalls

- Building a culture focused on continuous optimization

A/B testing allows brands to refine Twitter ads to maximize results cost-efficiently. Follow these tips and frameworks to incorporate an impactful testing methodology across your Twitter advertising programs.